Megan Jones

Police and Crime Commissioners (PCCs) were introduced in 2012 under the Police Reform and Social Responsibility Act 2011, representing one of the most radical changes to governance structures in England and Wales. PCCs are directly elected by the public, and their statutory functions require them to (1) hold their own police force to account on behalf of the public, (2) set the policing priorities for the area through a police and crime plan and (3) appoint a Chief Constable.

They replaced the former Police Authority committee-style structure, which was criticised for its lack of visibility and accountability to the public and the communities it was designed to serve. The emergence of PCCs was therefore a result of the failings of the previous governance mechanism and a political shift of focus from national to local governance.

In my research, I looked at the impact that PCC governance has on drug policy, using the West Midlands police force area as a case study. Drug policy, and specifically a harm reduction approach*, is just one area of policing and priorities; it was used to explore the statutory role of the PCC and, more broadly, how the role can be interpreted or used beyond its statutory framework.

In August 2019, the latest drug-related death figures were announced by the ONS. They are now the highest on record, with 4,359 deaths in England and Wales recorded in 2018 (ONS, 2019). In the West Midlands, there is a drug-related death every 3 days (West Midlands PCC, 2017a). Over 50% of serious and acquisitive crime is committed to fund an addiction, and the cost to society is over £1.4 billion each year (West Midlands PCC, 2017a). This topic often divides opinion and can be politicised. However, these debates rarely prevent the considerable damage caused by drugs to often very vulnerable people and wider society. The official national response is focused on enforcement of the law, criminalising individuals for drug possession.

By interviewing a number of key actors within the drug policy arena and leaders in policing, both within forces and in PCCs' offices, I looked at how the PCC structure can enable a change in policy. This was combined with a desk-based study of publicly available documents on the drug policy approach taken in the West Midlands. Four key themes were explored: the statutory role of the PCC; the individual PCC; governance and public opinion; and the approach taken.

My results showed that the PCC role, and this new form of civic leadership, benefitted from convening power: the ability to draw upon key partners from across the public sector, the third sector and those with lived experience. This is an informal mechanism of governance strengthened by public mandate. PCCs also have the ability to prioritise by setting their strategic priorities in the police and crime plan. For example, in the West Midlands, the approach to drug policy has been narrowed to focus on high-harm drugs (heroin and crack cocaine), thus ensuring ‘deliverability’. This means that the limited resources available are more narrowly focused and can have a greater impact. The statutory role of a PCC allows work to proceed at pace and decisions to be made quickly, which means that new approaches and innovations can be trialled and piloted.

Of course, there are limitations. PCCs vary across the country and often do not speak with one voice, particularly on drug policy. There are also huge advantages to a good working relationship between the Chief Constable and the PCC, as demonstrated by the joint approach in the West Midlands.

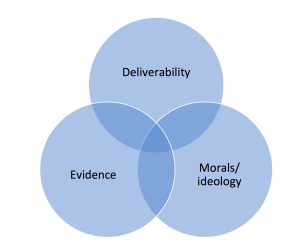

Figure 1: Drivers to drug policy, derived from the findings

My research allowed me to conclude that three key drivers are optimum for delivery of a PCC-led harm reduction approach: using the levers at their disposal, such as the statutory functions, and informal governance mechanisms, such as convening power, which are able to provide the strategic and political coverage required to deliver at pace.

PCCs are unique in the landscape of UK governance, and whilst weaknesses in the mechanisms designed to rein in their power could be viewed as worrying, in the drug policy space this has allowed for the development of a new approach in the West Midlands, one that is evidence-based and has the ability to save lives, reduce costs and reduce crime.

The potential of PCCs is arguably still being explored, but their ability to test new approaches and work effectively with partners will be essential in other areas of policy, such as the response to serious violence and the potential for an increasing role across the criminal justice system.

PCCs have a number of levers at their disposal, and are able to use informal and formal governance mechanisms to foster real change at the local level and drive forward evidence-based policy.

Megan Jones is the Head of Policy for the West Midlands Police and Crime Commissioner and is a former INLOGOV student who studied on the MSc Public Management programme. She tweets at @MegJ4289.